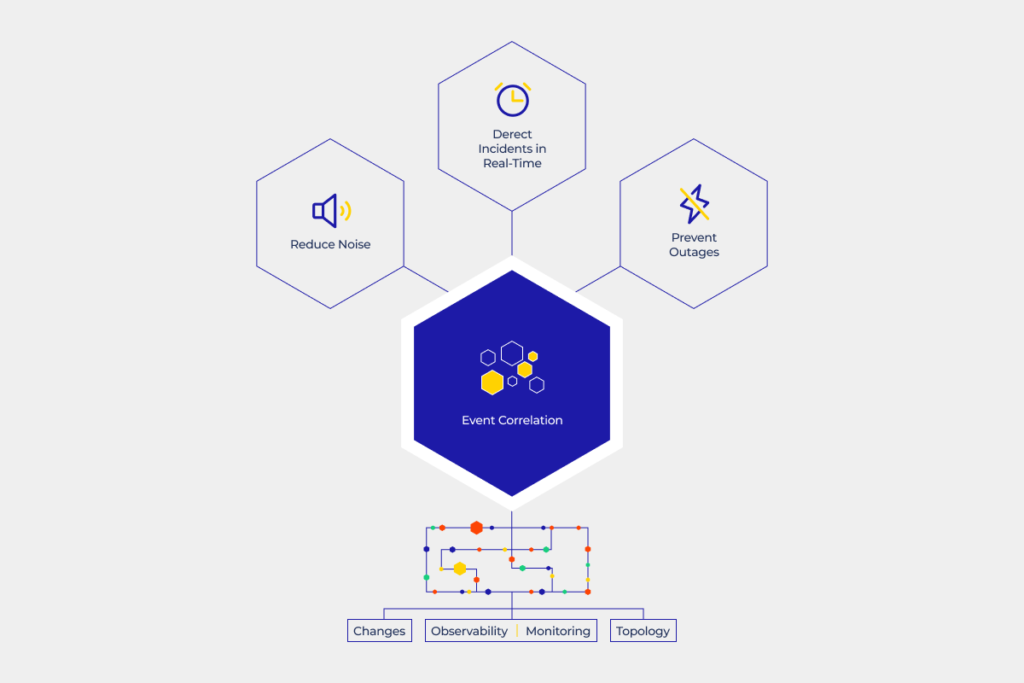

What is Event Correlation?

For those who are unfamiliar, event correlation is the practice of interpreting and evaluating the thousands of events that monitoring tools can generate when infrastructure or an application fails. Instead of missing or disregarding information because they are overwhelmed by “alert storms,” IT Operations teams can use event correlation tools and the insights they offer to understand and handle issues quickly.

Event correlation is the process of understanding the connections between events that take place in the IT environment. It enables IT operations management to make sense of the numerous events that occur and to pinpoint those that call for immediate ITOps team action or further inquiry.

By analyzing unstructured data, an AIOps tool can find higher-level connections that an infrastructure monitoring tool is likely to overlook. Some go even further than IT Ops and absorb data from streams like social media, allowing businesses to fully comprehend when their customers or brand are being harmed.

Events are simply the occurrences that take place during everyday operations. Most events are normal: a virtual machine turning on in response to an increase in workload, a parent device talking with devices or apps downstream, a user logging into a workstation. Events in IT operations can be divided into three groups:

- Informational or regular events are normal and only demonstrate that your network’s infrastructure is operating as intended;

- Exceptions happen when a device, service, or application operates outside of its intended parameters, suggesting serious performance deterioration or failure.

- Warnings are events produced when a service or device exhibits warning indications or unusual behavior.

Every event carries additional information that can be used to assess the condition of a network, and event correlation is the method used to make that assessment. With today’s broad, sophisticated networks, a big company’s IT estate can produce thousands of events at any given time. This presents a challenge for IT operations teams that lack tools with the speed and intelligence to keep up.

The ability to differentiate between occurrences that are actually related and those that are just concurrent or coincidental is required for event correlation to be truly successful. AIOps capabilities are needed for that kind of data analysis to handle the task’s complexity and quantity.

Learn more about event correlation in this guide:

- Event Correlation in Integrated Service Management

- The Process of Event Correlation

- Types of Events in Event Correlation

- Event Correlation KPI

- Event Correlation Use Cases

- Event Correlation Approaches and Techniques

- Importance of Event Correlation ☝️

- Benefits of Event Correlation

- AI-Driven Event Correlation

- Event Correlation in AIOps

- Analyst Recommendations on AIOps

- Misconceptions about Event Correlation

- How to Choose the Ideal Event Correlation Tool for Your Business

- Why Companies Choose Acure For Event Correlation

Event Correlation in Integrated Service Management

Event correlation is becoming an integral part of integrated service management, a well-known approach that uses a collection of standardized techniques to manage IT operations as a service.

Event correlation was first used in integrated management in the early 1980s, when several approaches developed by the artificial intelligence and database industries were applied to network element management to analyze alarms. Today, it is used for various purposes, including identifying wireless network faults, monitoring the performance of non-self-aware devices in network systems, and identifying firewall intrusions.

The six main processes that make up integrated service management are change management, service level management, operations management, configuration management, incident management, and quality management. Event correlation is a part of incident management but affects all six processes in some way.

A system’s monitoring generates data regarding events that occur. The volume of event data increases along with the complexity of an enterprise’s IT systems, making it tougher to make sense of this information stream. Problems arise because of:

- Changes in the arrangement of networks, devices, and connections, and in their relationships with each other;

- Combining software, computer resources, and cloud services;

- Practicing concepts like virtualized computing, decentralization, and processing of growing data volumes;

- Application addition, removal, updating, and integration with legacy systems.

The number of notifications is too high for IT operations employees, DevOps teams, and network operations center (NOC) administrators to keep up with, making it impossible to identify issues and outages before they impact important back-end systems or revenue-generating apps and services. These elements increase the chance that mishaps and outages will negatively affect the company’s bottom line.

Event correlation software addresses this problem. The automation and software tools that do this work are called event correlators. They are key components of IT event correlation: they accept a stream of event management data that is generated automatically across the managed environment.

The correlator examines these monitoring alerts using AI algorithms to arrange events into groups that may be compared to information about system modifications and network architecture to determine the root of the issue and the best course of action.

The Process of Event Correlation

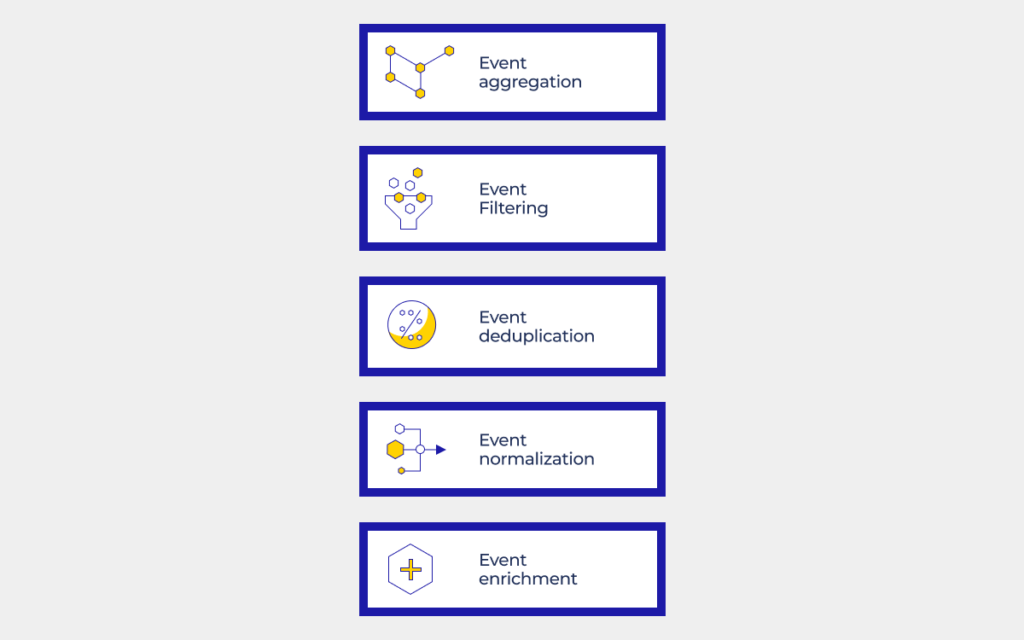

In addition to monitoring events, you should implement automated procedures to identify connections between various complex occurrences. The steps that make up the full event correlation process are usually as follows:

1. Aggregation: Infrastructure monitoring data is gathered from various devices, programs, monitoring instruments, and trouble-ticketing systems and delivered to the correlator.

2. Filtering: User-defined parameters, such as source, timeframe, or event level, are used to filter events. Alternatively, this step could be carried out before aggregation.

3. Deduplication: The tool locates repeated events caused by a single problem. Duplication can occur for various reasons (e.g., 1,000 users get the same error message, generating 1,000 different alerts). Despite numerous notifications, there is usually just one problem that needs to be solved.

4. Normalization: Normalization converts the data into a uniform format so that the event correlation software’s AI algorithm can interpret data from all sources in the same way.

5. Root cause analysis: In the most complicated step of the process, the connections between events are examined to identify the root cause. (For instance, events on one device are investigated to identify their effect on all other devices in the network.)

By this point, only a small subset of the events that were originally collected still needs attention. Some event correlation tools then produce a response, such as a suggestion for additional investigation, escalation, or automatic remediation, enabling IT managers to perform troubleshooting duties more effectively.
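As a rough illustration of the filtering, normalization, and deduplication steps, here is a minimal Python sketch. The `Event` fields, the severity names, and the source labels are all hypothetical, and the sketch normalizes before deduplicating so that differently formatted copies of the same alert collapse together (an ordering choice of this example). Aggregation is assumed to have already produced the combined list, and root cause analysis is left out.

```python
from dataclasses import dataclass

# Hypothetical severity ranking, loosely following the three event groups above.
SEVERITY_ORDER = {"info": 0, "warning": 1, "exception": 2}


@dataclass(frozen=True)
class Event:
    source: str    # monitoring tool that emitted the event (illustrative)
    host: str      # device or service the event refers to
    message: str
    severity: str  # "info", "warning", or "exception"


def filter_events(events, min_severity="warning"):
    """Keep only events at or above the given severity."""
    threshold = SEVERITY_ORDER[min_severity]
    return [e for e in events if SEVERITY_ORDER[e.severity] >= threshold]


def normalize(event):
    """Bring text fields into one format (trimmed, lower case)."""
    return Event(
        source=event.source.strip().lower(),
        host=event.host.strip().lower(),
        message=event.message.strip().lower(),
        severity=event.severity,
    )


def deduplicate(events):
    """Collapse events with the same host and message into one entry with a count."""
    counts = {}
    for e in events:
        key = (e.host, e.message)
        counts[key] = counts.get(key, 0) + 1
    return counts


def run_pipeline(raw_events):
    kept = filter_events(raw_events)
    normalized = [normalize(e) for e in kept]
    return deduplicate(normalized)


raw = [
    Event("monitor-a", "DB-01", "Disk full ", "exception"),
    Event("monitor-b", "db-01", "disk full", "exception"),
    Event("monitor-a", "web-01", "user login", "info"),
]
incidents = run_pipeline(raw)
print(incidents)
```

In this toy input, the informational login event is filtered out and the two disk alerts collapse into a single entry with a count of 2.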

Types of Events in Event Correlation

Although organizations correlate different types of events depending on their unique IT environments and business requirements, there are a few basic types:

System events: These events reflect unusual shifts in the condition of the system’s resources. System events include things like a full disk or a heavy CPU load.

Network events: Network events show the functionality and health of switches, routers, terminals, and other network elements. They also show network traffic when it exceeds certain thresholds.

Operating system events: These events, which represent changes in the interaction between hardware and software, are produced by operating systems like Windows, Linux, Android, and iOS.

Database events: These events explain to analysts and administrators how data is read, saved, and changed in databases.

Application events: Events produced by software applications can shed light on how well an application is doing.

Web server events: Events related to the hardware and software that provide web page content are referred to as web server events.

User events: These are produced by synthetic tracking or real-user monitoring systems and show infrastructure performance as seen by the user.

Other events: Synthetic checks, also known as probes, which examine functioning from the outside, are another type of event. Client telemetry and real-user monitoring produce particular events as users engage with the service.

Event Correlation KPI

Compression is the main key performance indicator (KPI) in event correlation. It measures the proportion of events that are folded into a smaller number of incidents and is expressed as a percentage.
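The text does not give a formula, so the following is only one plausible way to compute the figure: the share of raw events that no longer need separate attention once they are grouped into incidents. The function name and the sample numbers are illustrative assumptions.

```python
def compression_rate(event_count: int, incident_count: int) -> float:
    """Percentage of events absorbed by correlation (assumed definition)."""
    if event_count <= 0:
        raise ValueError("event_count must be positive")
    if not 0 <= incident_count <= event_count:
        raise ValueError("incident_count must be between 0 and event_count")
    return 100 * (event_count - incident_count) / event_count


# 1,000 raw events correlated into 200 incidents
rate = compression_rate(1000, 200)
print(f"{rate:.1f}%")
```

With these sample numbers, the rate comes out at 80%, which sits inside the 70 to 85 percent range discussed later in this section.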

Finding all events connected to a specific issue is the main objective of event correlation. Some events result directly from the primary issue, while others are symptoms that appear as the primary failure affects secondary components. When operators fully understand the relationship, they can handle both the cause and the symptoms.

A compression percentage that is close to 100 percent is ideal. In practice, however, this is unachievable because accuracy suffers as the compression rate approaches that threshold: the tool either misinterprets events as coming from the same problem or fails to see how one issue is connected to another. On the other hand, putting accuracy first causes the compression rate to drop.

Event correlation software does not compute accuracy. For instance, firm A might have events that are extremely different from firm B’s, and each organization may value different things. It is therefore difficult to define a single measure of how accurate the links between events are. Instead, customers evaluate accuracy as a soft, qualitative KPI using spot checks and business value analysis.

Event correlation professionals advise businesses to aim for the highest compression rate possible without compromising accuracy and business value. This often results in a compression rate of between 70 and 85 percent. However, in other circumstances, higher compression rates of 85% or even 95% are not uncommon.

You may improve the efficiency of your company’s event management efforts by using analytics to gain insights from event correlation software into other event-driven metrics. You must consider raw event quantities and improvements brought on by deduplication and filtering in order to do this. Analyze false-positive rates, signal-to-noise ratios, and enhancement statistics. To be more proactive in avoiding problems, you can also consider event frequency in terms of the most prevalent cause of hardware and software failures.

Good event correlation can also improve other metrics. These metrics, often found in IT service management, are designed to assess how engineers, DevOps workers, service teams, and automated repairs handle incidents. One group of KPIs is known as MTTx, since most of them begin with the abbreviation MTT, which stands for “mean time to.” These consist of:

- MTTR: The abbreviation can stand for Mean Time to Recovery, Mean Time to Respond, Mean Time to Restore, Mean Time to Repair, or Mean Time to Resolve. MTTR is the average length of time from when an incident report is first filed until the incident is resolved and marked closed. The measurement considers both the time spent testing and the time spent fixing the problem.

- MTTA (Mean Time To Acknowledge): MTTA is the average time between the activation of an alarm and the start of work on the problem. This indicator is helpful for monitoring the responsiveness of your staff and the performance of your alert system.

- MTTF (Mean Time To Failure): MTTF is the average time a technology product operates before it breaks down irreparably. The computation is used to evaluate how long a system will normally last, whether the latest version of a system is functioning better than the previous version, and to advise clients about predicted lifetimes and the best times to arrange system check-ups.

- MTTD (Mean Time To Detect): MTTD measures how long an IT deployment has a problem before the relevant stakeholders are aware of it. Users experience IT disruptions for a shorter period of time when the MTTD is shorter.

- MTBF (Mean Time Between Failures): MTBF is the average time between system failures. It is an essential maintenance parameter to assess equipment design, performance, and security, particularly for important assets.

- MTTK (Mean Time To Know): MTTK is the interval between issue detection and cause identification. In other words, it is the amount of time required to determine the cause of a problem.
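To make the averaging concrete, here is a small Python sketch that derives MTTA and MTTR from incident timestamps. The record layout (`opened`, `acknowledged`, `resolved`) and the sample values are hypothetical; a real ticketing system will expose its own field names.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records; field names are assumptions for this example.
incident_log = [
    {
        "opened": datetime(2024, 1, 1, 9, 0),
        "acknowledged": datetime(2024, 1, 1, 9, 5),
        "resolved": datetime(2024, 1, 1, 10, 0),
    },
    {
        "opened": datetime(2024, 1, 2, 14, 0),
        "acknowledged": datetime(2024, 1, 2, 14, 15),
        "resolved": datetime(2024, 1, 2, 16, 0),
    },
]


def mean_minutes(records, start_key, end_key):
    """Average duration in minutes between two timestamps across all records."""
    durations = [
        (r[end_key] - r[start_key]).total_seconds() / 60 for r in records
    ]
    return mean(durations)


mtta = mean_minutes(incident_log, "opened", "acknowledged")
mttr = mean_minutes(incident_log, "opened", "resolved")
print(f"MTTA: {mtta:.0f} min, MTTR: {mttr:.0f} min")
```

The same helper could be pointed at other timestamp pairs (for example detection and cause identification for MTTK) if those fields are recorded.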

For event management metrics, examine the raw event volume, then take note of the reductions brought about by deduplication and filtering. For event enrichment data, use the percentage of alerts that were enriched and the level of enrichment, the signal-to-noise ratio, or the false-positive percentage. Accurate event frequency is helpful for locating noise and improving actionability. It is also beneficial to consider the overall monitoring coverage in terms of the proportion of events initiated by monitoring.

Event Correlation Use Cases

Event correlation is essentially a method that links different events to recognizable patterns. If certain patterns pose a security risk, a response can be triggered. Correlation can also be performed after the fact, once the data has been indexed. Among the most significant use cases of event correlation are:

Leading Airline ✈️

A major American airline was aware that even a small service interruption may result in millions of dollars in fuel wastage and lost revenue. The business employed numerous monitoring systems in an effort to maintain high uptime. However, they were fragmented, and the processes for incident detection and resolution were manual. The carrier introduced an AI-driven event correlation solution after first rationalizing and modernizing its monitoring technologies. Centralized monitoring, fewer incident escalations, and a 40% reduction in MTTR were all advantages of using event correlation.

Intruder Detection 🕵️

Let’s imagine an employee account hasn’t been used in years. The logs suddenly show a large number of login attempts. In a short while, the account might start executing unusual commands. Using event correlation, the security team can conclude that an attack is under way.

Let’s assume that after numerous failed attempts to log in, one succeeds. The correlation engine classifies this event as “curious.” We then discover that a port scan took place fifteen minutes earlier, and that the port scan and the login attempts came from the same IP address. The relationship between these occurrences raises the significance of the successful login to a serious concern.

We would need to rely more on luck than on expertise if we tried to locate these occurrences using manual correlation alone. With event correlation, you can identify and resolve the issue much more quickly.
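The scenario above can be sketched as a simple correlation rule. This is an illustrative example only: the event dictionaries, the type names (`port_scan`, `login_failure`, `login_success`), the 30-minute window, and the threshold of three failures are assumptions, not the behavior of any particular product.

```python
from datetime import datetime, timedelta


def suspicious_ips(events, window=timedelta(minutes=30), min_failures=3):
    """Flag IPs where a successful login follows a port scan and repeated failures."""
    by_ip = {}
    for e in sorted(events, key=lambda e: e["time"]):
        by_ip.setdefault(e["ip"], []).append(e)

    flagged = []
    for ip, evs in by_ip.items():
        for success in (e for e in evs if e["type"] == "login_success"):
            # Events from the same IP in the window leading up to the success.
            recent = [
                e for e in evs
                if timedelta(0) <= success["time"] - e["time"] <= window
            ]
            failures = sum(e["type"] == "login_failure" for e in recent)
            scanned = any(e["type"] == "port_scan" for e in recent)
            if scanned and failures >= min_failures:
                flagged.append(ip)
                break
    return flagged


t0 = datetime(2024, 1, 1, 12, 0)
sample_events = [
    {"ip": "203.0.113.7", "type": "port_scan", "time": t0},
    {"ip": "198.51.100.2", "type": "login_success", "time": t0 + timedelta(minutes=5)},
    {"ip": "203.0.113.7", "type": "login_failure", "time": t0 + timedelta(minutes=15)},
    {"ip": "203.0.113.7", "type": "login_failure", "time": t0 + timedelta(minutes=16)},
    {"ip": "203.0.113.7", "type": "login_failure", "time": t0 + timedelta(minutes=17)},
    {"ip": "203.0.113.7", "type": "login_success", "time": t0 + timedelta(minutes=18)},
]
flagged = suspicious_ips(sample_events)
print(flagged)
```

Only the address that combines a port scan, repeated failures, and a successful login is flagged; the isolated successful login from the other address is ignored.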

Financial SaaS Provider For Businesses 🏦

Only 5% of events could be successfully resolved by this enterprise software as a service provider’s level one service team. The company particularly suffered as alert volume increased 100-fold while processing payroll on Friday. The level one team improved its resolution rate by 400%, cut MTTA by 95%, and cut MTTR by 58% in the first 30 days by applying AI-based event correlation.

Retail Chain 🛍️

A national home improvement retailer had prolonged outages at its stores because point-of-sale activities were not being correlated. With event correlation, both the overall and average outage durations decreased by a combined 65 percent. The organization found that a high frequency of alerts, some of which were meaningless, made it difficult for the network operations center to identify important problems and caused resolution to be delayed. Major incidents decreased by 27% with a better event correlation solution, while root cause analysis improved by 226% and MTTR decreased by 75%.

Sporting Goods Manufacturer 👟

Even after adopting some event correlation techniques, this large athletic shoe and clothing manufacturer was still overrun with alert information from its IT monitoring. The organization significantly increased its capacity to recognize major situations, take swift action, and do precise correlations by switching to a machine learning-based system. Its MTTA decreased from 30 minutes to 1 minute in less than 30 days as a result.

Event Correlation Approaches and Techniques

Event correlation techniques concentrate on establishing links between event data and determining causality by examining event features including the time, place, procedures, and data type. Today, AI-enhanced algorithms play a significant role in identifying these connections and trends as well as the root cause of issues. Here is a summary:

- Time-Based Event Correlation: This method looks for connections between the timing and order of events by analyzing what took place just before or concurrently with an event. For correlation, you can specify a time window or latency requirement.

- Rule-Based Event Correlation: Using specified values for variables like transaction type or user city, this method compares events to a rule. This method can be time-consuming and ultimately unsustainable because a new rule must be written for each variable.

- Pattern-Based Event Correlation: The time-based and rule-based strategies are combined in pattern-based event correlation, which looks for events that match a predetermined pattern without requiring the values of individual variables to be specified. Pattern-based correlation uses machine learning to improve the event correlation tool and is significantly less laborious than the rule-based method. With the use of machine learning, the correlation program continuously increases its understanding of novel patterns.

- Rule-Based Approach: According to a predetermined set of rules, the rule-based method correlates events. The rule-processing engine examines the data until it reaches the desired state, taking into account the outcomes of each test and the interactions of the system events.

- Codebook-Based Approach: The codebook-based strategy is comparable to the rule-based strategy, which aggregates all events. It stores a series of events in a codebook and correlates them. Compared to a rule-based system, this approach executes more quickly since there are fewer comparisons made for each event.

- Topology-Based Event Correlation: This method is based on network topology, which is the physical and logical configuration of equipment such as servers and hubs, the nodes that make up a network, and knowledge of how those nodes are connected to one another. Because this technique maps events to the topology of impacted nodes or applications, users can more easily visualize incidents in relation to their topology.

- Domain-Based Event Correlation: This method connects events using event data gathered from monitoring systems that concentrate on a particular area of IT operations. Some event correlation tools perform cross-domain or domain-agnostic event correlation by gathering data from all monitoring tools.

- History-Based Event Correlation: This technique looks for similarities between recent events and past occurrences. A history-based correlation is comparable to a pattern-based correlation in this regard. However, history-based correlation is “dumb” in that it can only link occurrences by comparing them with similar events in the past, whereas pattern-based systems are adaptable and dynamic.

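As an illustration of the time-based technique in the list above, the sketch below groups events whose timestamps fall within a configurable gap of the previous event. The tuple layout, the event labels, and the 10-second window are assumptions made for the example; real tools may use fixed windows, sliding windows, or latency rules instead.

```python
def group_by_time_window(events, window_seconds):
    """Group (timestamp, label) pairs; a new group starts when the gap exceeds the window."""
    groups = []
    for event in sorted(events, key=lambda e: e[0]):
        if groups and event[0] - groups[-1][-1][0] <= window_seconds:
            groups[-1].append(event)
        else:
            groups.append([event])
    return groups


# Timestamps are seconds since an arbitrary start; labels are illustrative.
timeline = [
    (0, "switch down"),
    (5, "db timeout"),
    (8, "api errors"),
    (300, "disk warning"),
]
clusters = group_by_time_window(timeline, window_seconds=10)
for cluster in clusters:
    print([label for _, label in cluster])
```

Here the first three events land in one candidate incident because each occurs within 10 seconds of the one before it, while the disk warning five minutes later forms its own group. Timing alone does not prove that events are related, which is why this method is usually combined with the others in the list.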

Importance of Event Correlation ☝️

Some events among the network’s thousands of daily events are more significant than others. A server may have had a brief increase in demand, a disk drive may be beginning to fail, or a business service you depend on may be responding slowly. Without event correlation, it might be difficult to identify the problem. Perhaps you won’t even realize it until it’s too late.

Like a tenacious investigator, event correlation software can sort through the signals and draw the connections required to correlate incidents to events quickly and to distinguish the underlying issue from the signs of a problem. This is a crucial step in prioritizing and resolving incidents.

Event correlation software completes this work more quickly and accurately than humans. Even so, certain legacy products struggle to function in today’s cutting-edge IT environments. Because of this, more businesses are using AIOps for IT operations.

Benefits of Event Correlation

With the help of a series of related events, event correlation provides comprehensive context and logical analysis. Security analysts can then carefully decide how to respond and investigate in the future.

This entails using user-defined rules to transform unprocessed data into actionable alerts, alarms, and reports. The necessary course of action can then be taken. The following are some advantages of applying event correlation techniques:

- Real-time threat visibility: IT departments can benefit from active event correlation and analysis to quickly identify dangers. Errors, security breaches, and operational problems affect the business, and with early detection many of them can be avoided.

- Lowers the cost of operation: Tools for event correlation automate tasks like the analysis of extensive workflows to reduce the volume of warnings to the pertinent ones. As a result, the IT department can focus more on fixing immediate threats and spend less time attempting to make sense of it all.

- Monitoring for network security: The network can be monitored continuously. High-impact failures, like those that affect business services, can also be found and fixed.

- Better time management: Modern event correlation tools are user-friendly and effective, thus fewer resources are required. Additionally, using SIEM technologies for event correlation and analysis can save a significant amount of time.

- Continuous compliance reports: There may be different levels of security and networked system compliance required by federal, state, and municipal authorities. Techniques for event correlation can be utilized to guarantee continuous monitoring of all IT infrastructures. The actions required to mitigate such risks can then be detailed in reports that describe security-related threats and incidents.

Event correlation approaches are designed for event detection, interpretation, and control action assignment. The value of correlation intelligence will keep growing as data complexity rises.

AI-Driven Event Correlation

Event correlation in SIEM solutions makes it possible to assemble and analyze log data from many network applications, processes, and devices. This feature helps surface security risks and hostile activity patterns in business networks that would otherwise go undetected.

Many firms are using a blend of artificial intelligence and human intelligence to increase the accuracy of event correlation. They have come to the conclusion that neither can be successful on its own. SIEM solutions that contain correlation engines, machine learning algorithms, and artificial intelligence therefore mark a clear before and after in cybersecurity.

Event Correlation in AIOps

Event correlation was initially a procedure that required human engineers and developers. Things started to change around 2010. The first significant development in event correlation was the introduction of statistical analysis and visualization.

Event correlation systems can now automatically create new correlation patterns by learning from event data thanks to machine learning and deep learning. This was the first time artificial intelligence had been used to correlate events.

The term “AIOps” was first used by Gartner analysts in 2016. Big data management and anomaly detection are two additional use cases for AIOps that go beyond event correlation. In 2018, Gartner listed the following as the primary duties of an AIOps platform:

- Ingesting data from several sources, irrespective of type or vendor

- Performing real-time analysis at the point of ingestion

- Analyzing historical data from stored sources

- Utilizing machine learning

- Initiating an action or next step based on analytics

AIOps makes it possible to process a flood of event alerts, evaluate them quickly, derive insights, and identify incidents as they emerge, before they develop into severe outages.

The “black box effect” is one of the main problems in AI. Because machine learning algorithms and their instructions are not transparent, users tend to mistrust them, which slows adoption. AIOps event correlation tools can mitigate this problem when they offer transparency, testability, and control. Users of the software may be able to build or alter correlation patterns, as well as view them and test them before implementing them in real-world settings.

As AIOps develops, event correlation is expected to provide pattern-based predictions and identify root causes that people miss. Artificial intelligence-driven event correlation solutions may eventually just plug in and advise incident managers on how to proceed.

Analyst Recommendations on AIOps

In a market analysis from November 2019, Gartner Research pegged the AIOps platform market at $300 to $500 million annually. Gartner also predicted that 40% of DevOps teams would add AIOps technologies to their toolkits by 2023.

The analysts at Gartner advised businesses to implement AIOps gradually. Event classification, correlation, and anomaly detection should be the first important applications that are implemented. Over time, businesses can use these technologies to shorten outage times, improve IT service management, become proactive in reducing impact, assess the value of patterns, and eliminate false alarms.

In its review of the AIOps market for 2020, GigaOm found that products on the market vary widely in their degree of AI adoption and forecast a consolidation of vendors. According to GigaOm, many event correlation solutions have tacked on AI as an afterthought. Therefore, businesses must thoroughly examine the offerings and understand all of their features, including compatibility, which has been a challenge for some tools. The decision between an on-premises model and a cloud-native, on-demand model is another factor.

Misconceptions about Event Correlation

As GigaOm pointed out, the tools that come under the event correlation category differ in various ways. However, some misunderstandings are widespread.

Real-Time Processing: Many users believe that machine learning enables event correlation software to process and correlate new events in real time. Since this requires significant increases in computing power and breakthroughs in AI, no vendor currently offers this capability.

Anomaly Detection: Users frequently misunderstand how anomaly detection and event correlation are related. Anomaly detection is performed by monitoring and observability tools that look at a single, isolated metric over time and can identify when this metric enters an anomalous state. When they do so, these tools create events indicating that an abnormality was discovered. This output is one of the data streams fed into the event correlation engine. Anomaly detection is not currently a function of any event correlation solution.
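To show what "a single metric entering an anomalous state" can look like on the monitoring side, here is a deliberately simple Python sketch that flags values far from a trailing average and emits an event for each one. The z-score rule, the window size, the threshold, and the event fields are all assumptions chosen for illustration; production tools typically use more robust methods.

```python
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Emit an event when a value deviates strongly from the trailing window."""
    events = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat history to avoid dividing by zero.
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            events.append({"index": i, "value": values[i]})
    return events


# Hypothetical CPU utilisation samples in percent.
cpu_percent = [50, 52, 49, 51, 50, 51, 95, 50]
anomaly_events = detect_anomalies(cpu_percent)
print(anomaly_events)
```

The events produced this way would then be one input stream for the correlation engine, alongside events from other monitoring tools.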

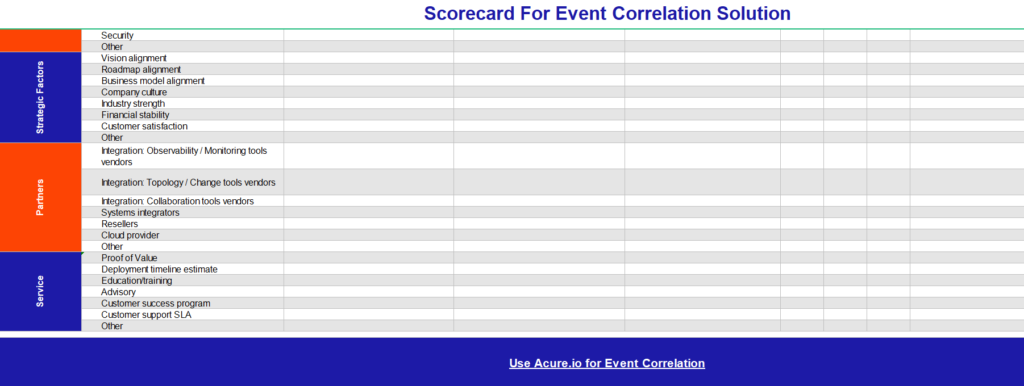

How to Choose the Ideal Event Correlation Tool for Your Business

The appropriate event correlation tool can help your IT Ops efforts produce higher business value. But competing claims and complex technology can make it challenging to determine which product is the best fit for your needs.

A list of important characteristics and capabilities is provided below. You can compare providers based on many criteria that are weighted according to their significance to your business.

1. User Experience 👨💻

- Convenience and safety of access: The ideal event correlation tool will enhance the user experience with ease of use to safely access business data.

- Intuitive navigation: Active event correlation tools should make the user experience better with an intuitive interface that helps navigate through the issues and come up with solutions smoothly.

- A cutting-edge, clear user experience: The ultimate goal of any tool should be to improve the user’s experience, and an intuitive interface will help with that.

- Integrated console: A single pane of glass to prevent incidents

- Native analytics: Simple to install and comprehend

- Easy integration of third-party analytics with best-in-class BI solutions

2. Functionality ⚙️

- Sources of data ingested: It should be easy and quick to navigate the sources of the data collected to understand the issue and offer a solution immediately.

- Platform for hosting data streams: Helps gather multiple data streams to detect a pattern in all of them.

- Correlated event types: Since all the event types will be different, the tool should be able to recognize multiple events with a single functionality.

- Interpretation and improvement of data to provide the best experience to the end users without any bugs or issues.

- Correlation methods used should be varied to identify different patterns and root causes of the event failures.

- Root cause analysis that is fully automated to avoid wasting time on manual operations.

- Ability to drill down and adjust root causes.

- Ability to view incidents in relation to topology and surroundings to determine the best solutions for that region.

3. Machine Learning And Artificial Intelligence 🦾

- Automation at level 0 turns manual activities into automated workflows to accelerate incident response.

- Scalability: The tool should be able to scale the run-time correlation engine to monitor the cloud computing environment.

- Performance that is agile-friendly to help teams offer value to their customers without consuming a lot of their time.

- The ability to combine all event feeds using existing tools and integration technology.

- Integration technology and the capacity to include all event feeds without the cost of consultants.

- The ability to quickly connect all event feeds and integration technology.

- Extensibility to ingest monitoring alerts, alarms, and other event indications.

- Security to keep the data and data sources safe from fraudulent incidents.

4. Strategic Aspects 📈

- Business Model Harmony: For identifying patterns that have common go-to-market, vulnerability, value-sharing, and commercial features

- Vision Alignment to help achieve a win-win situation for both concerned parties.

- Roadmap Alignment will help in achieving the goals of the company by ensuring that all the efforts are directed towards achieving that goal.

- Organizational Behavior: Helps the management make sense of the events and understand what changes should be made at the organizational level.

- Monetary security: The tool should be able to recognize which resource is a money-pit and which solution will help save money.

- Customer satisfaction: The end goal of any company is to achieve customer satisfaction and the event correlation tool should be able to solve any issues that customers face.

5. Partners 🤝

- Integrations with vendors of observability and monitoring tools.

- Integrations with vendors of topology and change tools.

- Integrations with vendors of collaboration tools.

- System integrators that link all the applications that could be used in solving the issues.

- Cloud service provider

- Resellers

6. Service 🧰

- Proof of Value: Proving value in the eyes of your customer by dealing with problems they face in a quick and efficient manner.

- Training/education: The learning curve of the tool should be minimal, with tutorials and training that help users understand its features and advantages.

- Advisory for more effective intrusion detection

- Customer support SLA

- Customer success program
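The weighted comparison described at the start of this section can be sketched in a few lines of Python. The category keys mirror the six headings above, but the weights, the 1 to 5 scoring scale, and the vendor names and scores are made-up values for illustration only.

```python
def weighted_score(scores, weights):
    """Weighted average of category scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(scores[category] * w for category, w in weights.items()) / total_weight


# Hypothetical weights reflecting what matters most to one business.
weights = {
    "user_experience": 2,
    "functionality": 3,
    "ml_and_ai": 2,
    "strategic_aspects": 1,
    "partners": 1,
    "service": 1,
}

# Hypothetical scores on a 1-5 scale for two fictional vendors.
vendor_a = {"user_experience": 4, "functionality": 5, "ml_and_ai": 3,
            "strategic_aspects": 4, "partners": 3, "service": 4}
vendor_b = {"user_experience": 5, "functionality": 3, "ml_and_ai": 4,
            "strategic_aspects": 3, "partners": 4, "service": 5}

score_a = weighted_score(vendor_a, weights)
score_b = weighted_score(vendor_b, weights)
print(f"Vendor A: {score_a:.2f}, Vendor B: {score_b:.2f}")
```

Changing the weights is the point of the exercise: a team that values service and partners more heavily than functionality could reach a different ranking from the same scores.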

Download scorecard for event correlation solution!

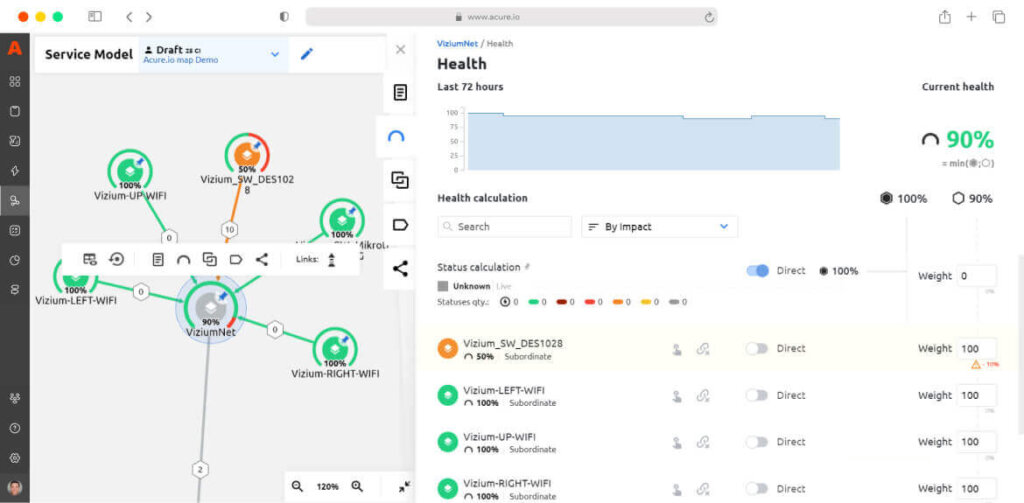

Why Companies Choose Acure For Event Correlation

Acure is a simple yet powerful AIOps platform for event correlation and deduplication. It helps reduce the noise level of events coming from different monitoring systems by 70% and increases the productivity of IT Ops. Deduplication and correlation tools prevent incidents from recurring and allow you to prioritize alerts, so the IT Ops team can focus only on important tasks.

Acure developers are constantly listening to customers and upgrading the platform according to their wishes. Currently, synthetic triggers are used for event correlation. Acure triggers process events received via the monitoring system. It is possible to create a trigger from a template or write it from scratch using scripts written in the Lua language. However, a platform update is planned soon: synthetic triggers will be replaced by signals driven by scripts on a low-code engine that is already used for automation scenarios. This is crucial for systems with a dynamic environment.

Event correlation in Acure is also topology-based. The topology graph displays the whole IT environment like a tree with links between each configuration item. You can see the health and state of the IT complex and each item individually.

And last but not least, Acure is free to use with 5 GB of daily data and unlimited scenarios, configuration items, and users.

Want to see how event correlation works in Acure in practice? Here is our event correlation & noise reduction use case for your IT department.